Prioritized Sweeping

An interactive animation using p5.js

By Zhihan Yang on August 24th 2020

Environment description

State (0, 0) is the starting state; state (9, 9) is the terminal state. For each position tuple, its first integer is the row index and its second integer is the column index. All transitions yield a reward of zero except those leading into the terminal state, which yield a value of one.

Algorithm

As we’ve seen in a previous post, Dyna-Q has a low planning efficiency, especially for the first few episodes. For an environment where most transitions yield zero reward and where the values of all state-action pairs are initialized to be zero, most state-action pairs have a zero TD error at the beginning. A planning step using a zero TD error is useless because it doesn’t change the value table at all.

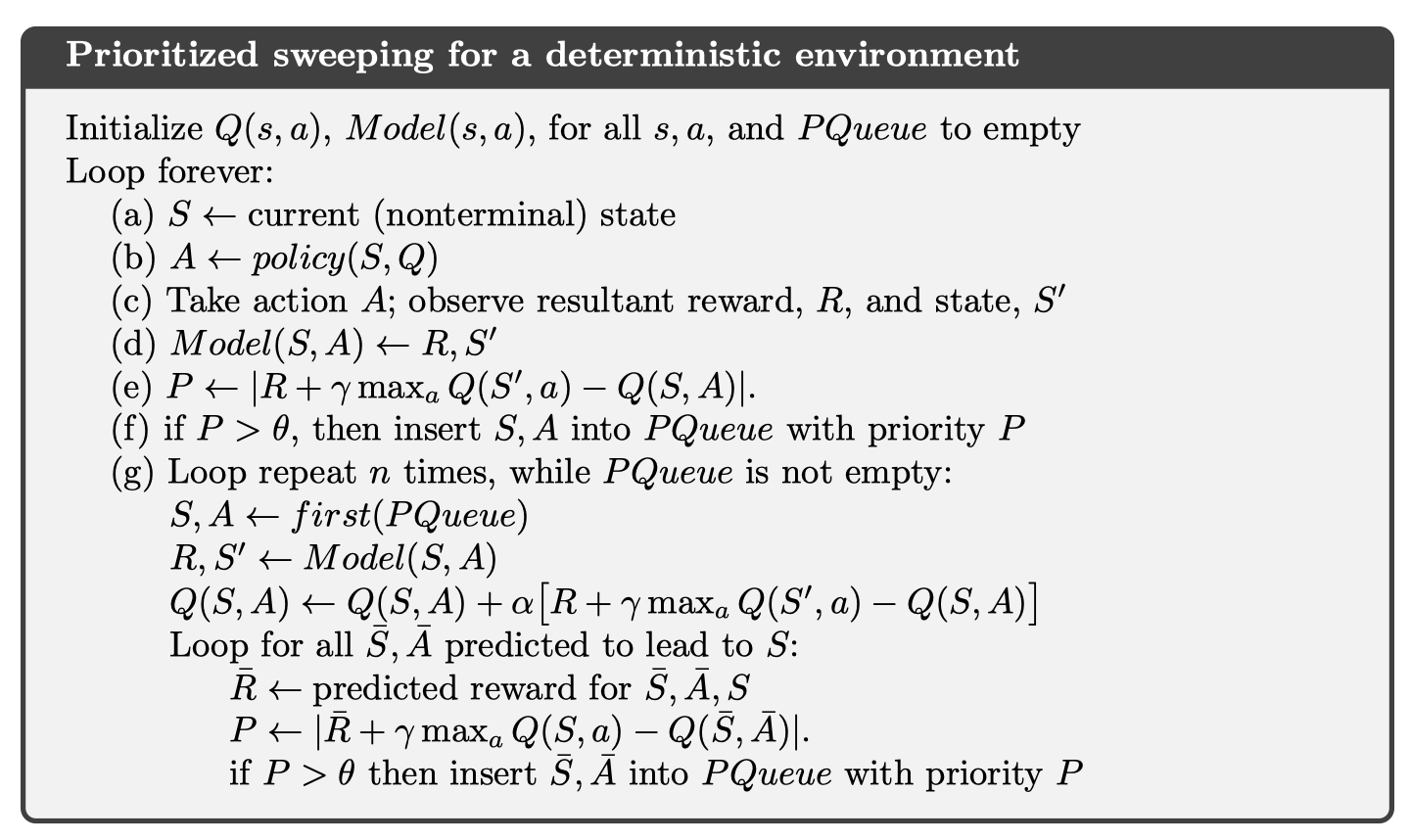

A better way to plan is to work backwards from state-action pairs whose values have been changed. This idea is called backward focusing, which has led to following algorithm called Prioritized Sweeping (PS). To understand it, let’s only consider what happens in the first two episodes using this algorithm with 3 planning steps per learning step.

During the first episode (before the final action is taken), all TD errors are zero. DynaQ does not recognize this problem and thus performs a lot of useless updates (both learning and planning), while PS skips these updates because these TD errors are below its threshold (?). Upon reaching the terminal state, the TD error is for-the-first-time positive. DynaQ immediately takes advantage of this information and performs a learning step, while PS delays the learning step by pushing the state-action into a queue with the TD error as its priority.

After the final learning step, we have 3 planning steps. At this point, most state-action pairs have a TD error of zero. Again, DynaQ ignores this and randomly selects candidates to perform planning steps, resulting in low efficiency. On the other hand, PS first performs the delayed learning step (technically should be called a planning step) by popping the state-action pair with the highest priority, and adds the state-action pair(s) that 1) the agent has seen, 2) is predicted by the model to lead into that state-action pair whose value has just been updated and 3) whose TD error is above some threshold. This ensures that all planning steps are effective because all TD errors used are non-zero.

Note that this scheme of prioritizing updates is probably not the most efficient way (finding the optimal policy in the fastest possible way), but is nevertheless effective.

During the second episode (starts at timestep 1314), PS continues to focus on updating state-action pairs with a non-zero TD error during planning. In the animation below, a frontier with high-priority state-action pairs slowly move towards the origin.

Legend

- Left diagram:

- White squares: states

- Black arrows: argmax actions

- Colorful triangles: action values

- Numbers on colorful triangles: action values

- Red highlight: current state-action pair (selected by either learning or planning

- Green highlight: next state and its argmax action

- Slider: a tool you can use to scroll through all the updates (learning and planning)

- Right diagram:

- Colorful triangles: priority values (TD errors)